Virtual Production

RESOURCE AS COMPOSITING

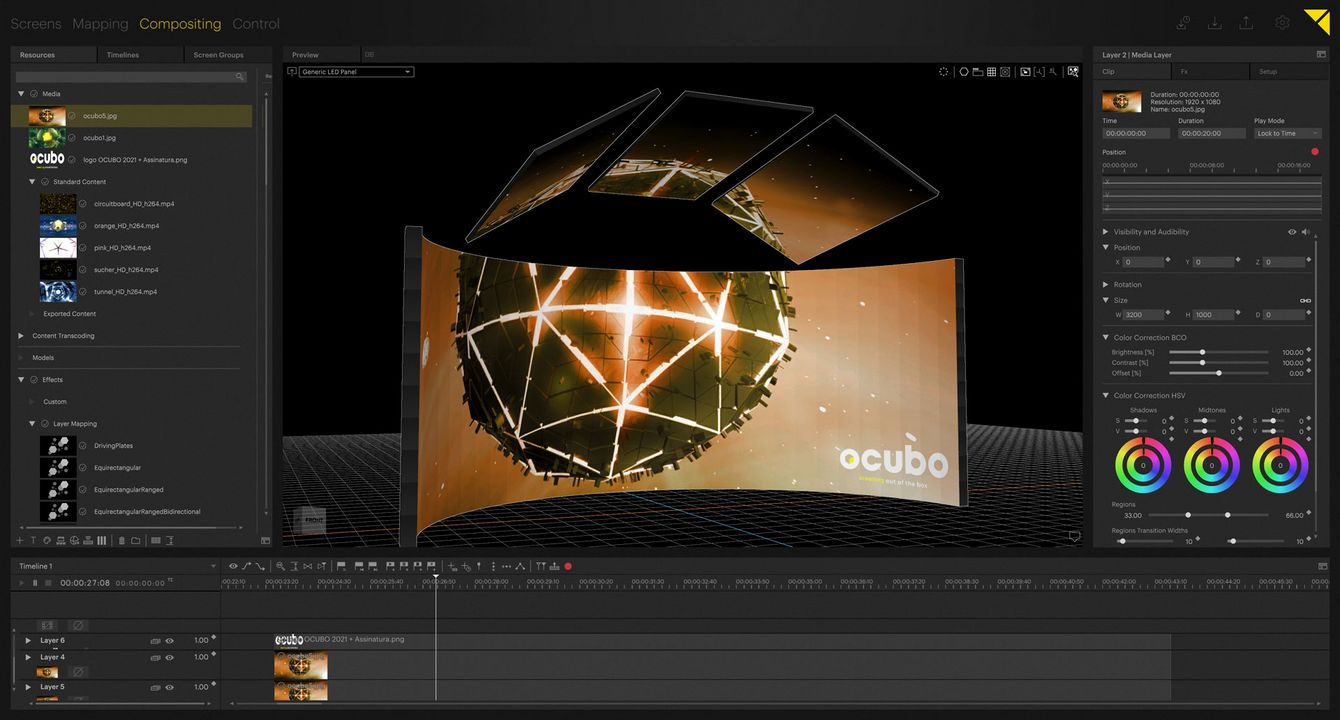

"Resource as Compositing" is a workflow feature that lets PIXERA users work and interact with 3D worlds created in other software environments (e.g. Unreal, Notch) in a simple and highly effective way. Since version 1.0, PIXERA users have been able to "dive into" virtual screens, where they find a full 3D compositing space that can hold not only video content but also textured 3D objects.

Version 1.8 integrates resources that contain their own 3D worlds so that their compositing combines seamlessly with PIXERA's. Navigating inside the preview, editing perspectives inside the virtual world and many other functions all use familiar PIXERA tools. It's even possible to place 3D objects and videos from PIXERA inside a compositing that originates in one of these resources. Handling 3D scenes from different engines as compositing lays the foundation for using these resources in PIXERA-based productions in a user-friendly and effective way.

What do we mean when using terms like XR/AR/VR?

Extended Reality (XR) is an umbrella term that encompasses all available technologies, both software and hardware based, that can be combined to extend or augment one's interactions with reality. Depending on the approach taken in a particular project, more specific terms such as Augmented Reality (AR), Virtual Reality (VR) or Mixed Reality (MR) might be more appropriate.

Why should I care about "XR Stages" etc.?

While the COVID-19 pandemic was one of the major reasons why XR stages and related broadcast setups suddenly became widely known, the rapid technological developments of recent years are equally responsible for the rise of XR applications: advances in real-time graphics, unprecedented hardware processing power and the fast global growth in 3D engine usage. Building an impressive, fully immersive XR stage is still a job for tech professionals, but it has never been easier to create inspiring live production environments that can make a real impact on your audience.

Impressions

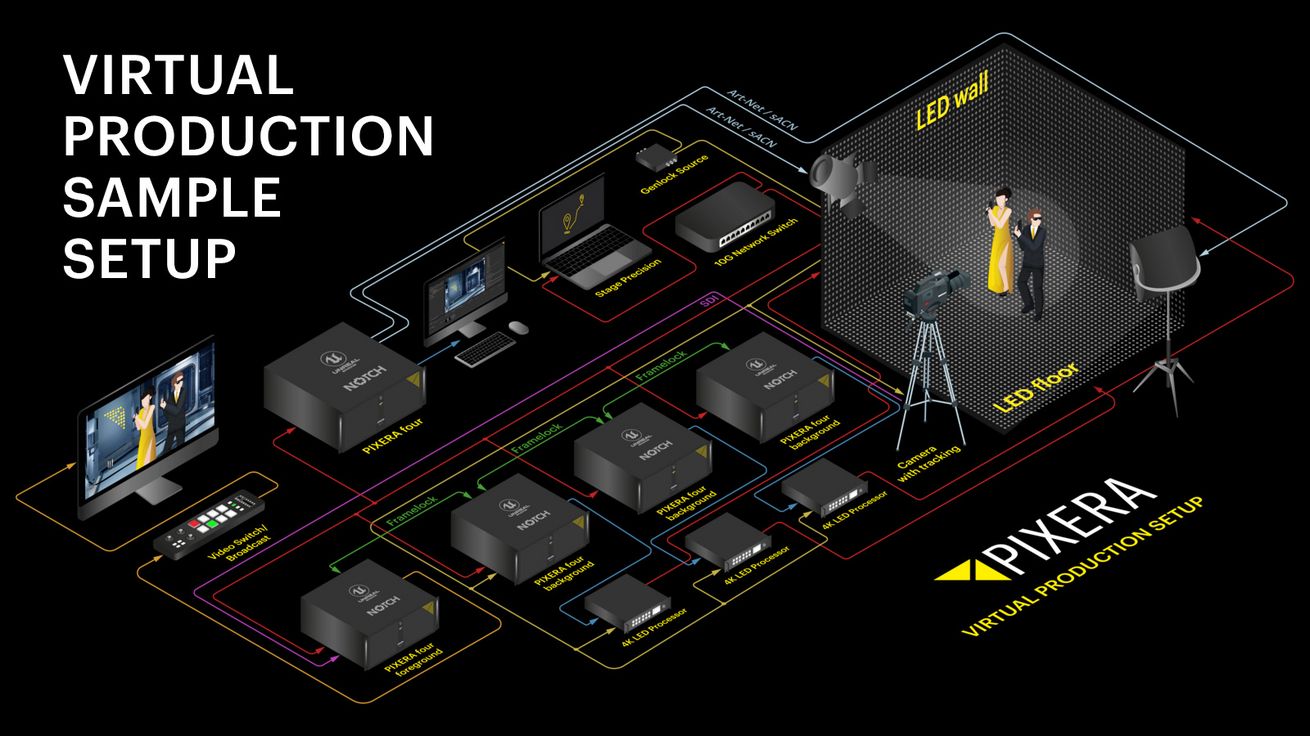

VIRTUAL PRODUCTION SAMPLE SETUP (UNREAL)

- One PIXERA four director is needed as a master for your preview.

- Each LED wall needs its own PIXERA four to render the background and the back-projected camera frustum.

- Unreal (via the plug-in) renders on the PIXERA four servers as a "Resource as Compositing" integration.

- For mixing the camera live signal and foreground, a dedicated PIXERA four server with a live capture card is necessary.

- The mixed output can then be fed to your video switcher.

- Stage Precision is used to feed tracking data (Mo-Sys, stYpe, OptiTrack, ...) to PIXERA via a direct API.

- Genlock has to be connected to your camera, tracking system, LED processors and one of the PIXERA clients.

- Framelock has to be used to sync the client servers.
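
What each LED wall has to show depends on where the tracked camera is. A common way to compute such a view is the generalized (off-axis) perspective projection for a planar screen. The Python sketch below illustrates that generic technique under stated assumptions: a flat, rectangular wall described by three world-space corners, a tracked camera position as delivered by the tracking system, and OpenGL-style clip conventions. It is not PIXERA's or Unreal's implementation, and the helper names are hypothetical.

```python
import math

Vec3 = tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec3) -> Vec3:
    length = math.sqrt(dot(a, a))
    return (a[0] / length, a[1] / length, a[2] / length)


def off_axis_projection(pa: Vec3, pb: Vec3, pc: Vec3, pe: Vec3,
                        near: float, far: float):
    """Hypothetical helper: generalized perspective projection for a flat wall.

    pa, pb, pc: lower-left, lower-right and upper-left wall corners (world space).
    pe: tracked camera position (world space).
    Returns (projection, view) as row-major 4x4 nested lists (OpenGL conventions).
    """
    vr = normalize(sub(pb, pa))     # wall's right axis
    vu = normalize(sub(pc, pa))     # wall's up axis
    vn = normalize(cross(vr, vu))   # wall normal (right x up)
    va, vb, vc = sub(pa, pe), sub(pb, pe), sub(pc, pe)
    d = -dot(va, vn)                # distance from camera to the wall plane
    # Frustum extents on the near plane; asymmetric when the camera is off-center.
    left = dot(vr, va) * near / d
    right = dot(vr, vb) * near / d
    bottom = dot(vu, va) * near / d
    top = dot(vu, vc) * near / d
    projection = [
        [2 * near / (right - left), 0.0, (right + left) / (right - left), 0.0],
        [0.0, 2 * near / (top - bottom), (top + bottom) / (top - bottom), 0.0],
        [0.0, 0.0, -(far + near) / (far - near), -2 * far * near / (far - near)],
        [0.0, 0.0, -1.0, 0.0],
    ]
    # Rotate world space into wall space and move the camera to the origin.
    view = [
        [*vr, -dot(vr, pe)],
        [*vu, -dot(vu, pe)],
        [*vn, -dot(vn, pe)],
        [0.0, 0.0, 0.0, 1.0],
    ]
    return projection, view


# A 2 m x 2 m wall in the z = 0 plane; the camera is 1 m in front, 0.5 m right of center.
wall = ((-1.0, -1.0, 0.0), (1.0, -1.0, 0.0), (-1.0, 1.0, 0.0))
projection, view = off_axis_projection(*wall, (0.5, 0.0, 1.0), near=1.0, far=100.0)
```

Feeding in a new camera position every frame shifts the frustum asymmetrically, which keeps the perspective on the wall correct from the camera's point of view; this is also why the tracking data, the render servers and the LED processors must stay in sync.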

UNREAL CONTENT PLUG-IN

By using the "Resource as Compositing" feature, Unreal scenes can be displayed within PIXERA. In addition, AV Stumpfl has developed a dedicated plug-in for the Unreal Engine that makes it possible to edit scene properties directly from PIXERA. The plug-in can be used, for example, to move Unreal objects or adjust lighting settings.

The scene properties appear in PIXERA as part of the layer on which the resource has been placed, so PIXERA users can manipulate the virtual worlds in front of them with the timeline tools. In short, PIXERA becomes a powerful, integrated editing environment that lets users concentrate on the project or show experience they want to create.
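
How a timeline can drive an exposed scene property is easiest to see with plain linear keyframe interpolation. The sketch below is a generic, hypothetical example: the property name `light_intensity`, the helper `evaluate_track` and the 50 fps playback rate are illustrative assumptions, and PIXERA's timeline may offer other interpolation modes.

```python
from bisect import bisect_right


def evaluate_track(keyframes: list[tuple[float, float]], time: float) -> float:
    """Hypothetical helper: linear interpolation between (time, value) keyframes.

    Keyframes must be sorted by time; outside the keyed range the first or
    last value is held.
    """
    times = [t for t, _ in keyframes]
    if time <= times[0]:
        return keyframes[0][1]
    if time >= times[-1]:
        return keyframes[-1][1]
    i = bisect_right(times, time)   # first keyframe strictly after `time`
    t0, v0 = keyframes[i - 1]
    t1, v1 = keyframes[i]
    alpha = (time - t0) / (t1 - t0)
    return v0 + (v1 - v0) * alpha


# Hypothetical Unreal light intensity exposed on a layer: up over 5 s, down over 5 s.
light_intensity = [(0.0, 0.0), (5.0, 50.0), (10.0, 0.0)]
frame_rate = 50.0  # assumed playback rate
samples = [evaluate_track(light_intensity, frame / frame_rate)
           for frame in range(0, 501, 125)]
# samples == [0.0, 25.0, 50.0, 25.0, 0.0]
```

Each rendered frame samples the track at its timeline position and passes the result to the engine, so editing a keyframe changes the Unreal scene without touching the Unreal project itself.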

Unreal Integration

The Epic MegaGrant is an important catalyst for our work on Unreal Engine integration for virtual production. It supports our efforts to achieve low-level integration of render results and to let users manage Unreal worlds with PIXERA's digital production workflows.

Color Correction

Color management is accessible from each of PIXERA's main interface tabs, and users can decide for themselves how deeply they want to engage with it.

Various sub-menus offer a wide range of options for PIXERA's internal color space settings.

One example of this is OpenColorIO (OCIO) support, where users can upload OCIO configuration files that then determine PIXERA’s color transforms.

An ACES workflow can also be implemented with PIXERA. At a basic level, color changes can be made on the available timeline layers or directly on the outputs, using a variety of easily accessible menus and/or a traditional color picker. For more creative color control, LUTs (lookup tables) can be added to resources and outputs.
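
Applying a 3D LUT means looking up each RGB value in a lattice of precomputed colors and interpolating between the nearest entries. The minimal sketch below assumes the widely used `.cube` text format (a `LUT_3D_SIZE` line followed by N³ rows, red varying fastest) with its default 0 to 1 input domain, and uses trilinear interpolation. It is an illustration only: the helper names are hypothetical, and PIXERA's own LUT handling may differ (e.g. tetrahedral interpolation or GPU texture sampling).

```python
def parse_cube(text: str) -> tuple[int, list[tuple[float, ...]]]:
    """Hypothetical helper: parse a minimal 3D .cube LUT.

    Only LUT_3D_SIZE and the data rows are read; other keywords such as TITLE
    or DOMAIN_MIN/DOMAIN_MAX are ignored, so a 0..1 input domain is assumed.
    """
    size, table = None, []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("LUT_3D_SIZE"):
            size = int(line.split()[1])
        elif line[0].isdigit() or line[0] in "+-.":
            table.append(tuple(float(v) for v in line.split()[:3]))
    if size is None or size < 2 or len(table) != size ** 3:
        raise ValueError("not a supported 3D .cube LUT")
    return size, table


def apply_lut(rgb, size, table):
    """Trilinear lookup of one RGB triple; red varies fastest in .cube order."""
    base, frac = [], []
    for c in rgb:
        x = min(max(c, 0.0), 1.0) * (size - 1)   # clamp, then scale to lattice coords
        i = min(int(x), size - 2)                 # keep i + 1 inside the lattice
        base.append(i)
        frac.append(x - i)
    (r0, g0, b0), (fr, fg, fb) = base, frac
    out = [0.0, 0.0, 0.0]
    # Blend the 8 surrounding lattice points, weighted by distance along each axis.
    for dr in (0, 1):
        for dg in (0, 1):
            for db in (0, 1):
                w = ((fr if dr else 1.0 - fr)
                     * (fg if dg else 1.0 - fg)
                     * (fb if db else 1.0 - fb))
                entry = table[(r0 + dr) + (g0 + dg) * size + (b0 + db) * size * size]
                for k in range(3):
                    out[k] += w * entry[k]
    return tuple(out)


# Usage: a 2x2x2 LUT that inverts each channel.
rows = [f"{1 - r} {1 - g} {1 - b}" for b in (0, 1) for g in (0, 1) for r in (0, 1)]
size, table = parse_cube("LUT_3D_SIZE 2\n" + "\n".join(rows))
graded = apply_lut((0.25, 0.5, 0.75), size, table)   # approximately (0.75, 0.5, 0.25)
```

Real grading LUTs typically use 17, 33 or 65 points per axis; the lookup stays the same, only the lattice gets finer.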

The HDR settings make it possible to change HDR clipping and compensation values.

The ability to write and implement one’s own OpenGL shaders is another highlight of PIXERA in the context of color management and correction.

If you have specific requests or questions regarding virtual production, don't hesitate to contact us:

virtualproduction@PIXERA.one

We reserve the right to make modifications in the interest of technical progress without further notice.